Building Developer Tools

After spending a large part of my career building tools, I realized I had unconsciously followed a consistent framework for identifying and building developer tools. It seemed like a good idea to make it concrete – both for sharing and collecting feedback – leading to this series of posts. I like to spend at least half of my available time making it easier to do the work before actually setting about doing it. It naturally seemed like a good idea to capitalize on this trait.

For the sake of style and brevity, these posts are highly prescriptive. Remember that these are heuristics based on my own experience at a large tech company; with this in mind, you should make sure to apply these in your unique context.

With that caveat out of the way, I'd like to paraphrase Fred Brooks by saying: all computer scientists are toolsmiths. This leads me to believe that some of these ideas are generally applicable; after all, we build tools for communication, commerce, art, and just about everything else. Even though I'm writing from my point of view – as a Software Engineer – these heuristics should be helpful irrespective of your current hat: Product Manager, Designer, or something else entirely.

Index

You can read these posts in any order; I've repeated important advice.

- Leverage: Why you'd want to work on developer tools in the first place.

- Types of Opportunities: Mental models for evaluating opportunities.

- Identifying Opportunities: Finding car-shaped holes in a world full of horses.

- A Checklist For a Well-Built Tool: Suggestions for thoughtfully crafted tools.

- Effective Execution: Structuring your work in a way to get the most value.

- Traps For Tool-Builders: Common anti-patterns that you should avoid.

- Meaningful Measurements: Metrics are a double-edged sword; wield them with care.

- Snapshots of Excellent Tools: Tools I find inspiring, complete with reasons why.

- Books & Other Resources I've Found Useful: Everything from Tufte to Hamming.

- Acknowledgments

Leverage

There are only so many hours to work, and you want to use them in a way that gets you the most value for your time and energy. One way to achieve that is to become a force-multiplier and make everyone around you more effective by working on Developer Tools. Of course, the math only works out if anyone uses your tools in the first place.

A toolmaker succeeds as, and only as, the users of his tool succeed with his aid. – Fred Brooks, The Computer Scientist as Toolsmith

A model you shouldn't take too seriously

Being a toolsmith is only valuable if the incremental gain from working as a toolsmith is higher than directly working on the problem.

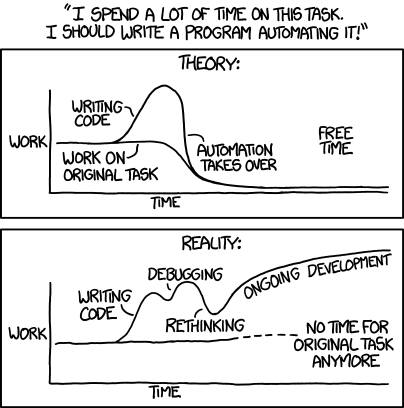

Paraphrasing Randall's model, automation can, occasionally, be helpful. I'm still trying to decide if I should be impressed or terrified that there's always an appropriate XKCD comic for everything I write, one that makes the point with significantly more clarity, fewer words, and better humor.

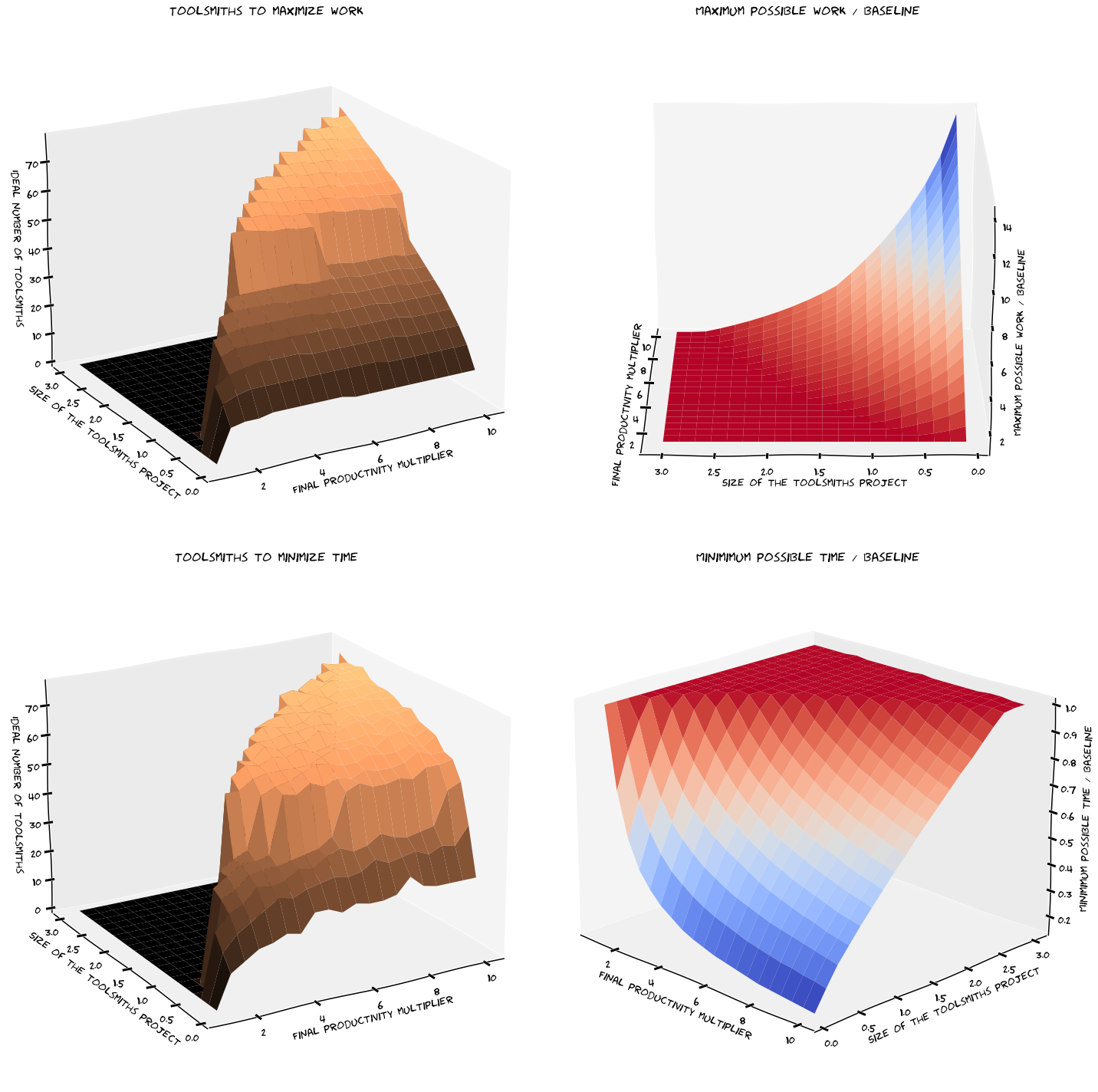

As heretical as it may sound, I tried to improve on this model. Varying both the size and value of the toolsmiths' work defines an envelope where toolsmiths can be very effective – or completely useless.

The complete underlying model is on DeepNote (a fairly promising new notebook platform). A summary:

Consider a team with a hundred engineers; think about

- the total impact you think toolsmiths can have

- how much work do toolsmiths need to do to achieve it (compared to the actual result)

Choosing to maximize the work done or minimize the time taken results in an optimum number of toolsmiths: ranging from 0 (if they're unlikely to have any meaningful effect) to 70 (if they could potentially 10x all the other engineers). Under ideal circumstances, you can get an order of magnitude more work done – or halve the time to complete your initial project.
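The DeepNote model itself isn't reproduced here. As a rough illustration of the same trade-off, here is a minimal sketch under invented assumptions: the productivity multiplier for the remaining engineers rises toward `max_multiplier` with diminishing returns as toolsmiths are added, and `saturation` (a hypothetical parameter) controls how quickly. Its numbers will not match the notebook's; only the shape of the argument carries over.

```python
import math


def team_output(team_size, toolsmiths, max_multiplier, saturation=20.0):
    """Work done per unit time when some engineers build tools instead.

    Assumption (not taken from the notebook): the multiplier applied to the
    remaining engineers climbs from 1 toward max_multiplier with diminishing
    returns as more toolsmiths are added.
    """
    builders = team_size - toolsmiths
    gain = (max_multiplier - 1.0) * (1.0 - math.exp(-toolsmiths / saturation))
    return builders * (1.0 + gain)


def best_toolsmith_count(team_size, max_multiplier, saturation=20.0):
    """Brute-force the toolsmith count that maximizes total output."""
    return max(
        range(team_size + 1),
        key=lambda count: team_output(team_size, count, max_multiplier, saturation),
    )
```

With `max_multiplier=1.0` (tools cannot help at all) the best count is zero; raising the multiplier pushes the optimum upward.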

Choosing appropriate points along the axis is hard, of course: particularly if you decide you want to consider the company's lifetime as the actual project. You can choose an appropriate "productivity multiplier" based on how much opportunity exists.

It wouldn't be engineering if there weren't any trade-offs. In this case: a team including toolsmiths will initially be slower than one without, but it will catch up and leapfrog the other team as the toolsmiths continuously improve and refine the processes.
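To make the slow start and eventual leapfrog concrete, here is a toy comparison with invented numbers. It assumes every week of tooling work makes each remaining engineer `weekly_gain` more productive (a hypothetical, linear improvement) and reports the first week in which cumulative output catches up with a team that has no toolsmiths.

```python
def weeks_to_catch_up(team_size=100, toolsmiths=10, weekly_gain=0.01, horizon=520):
    """Return the first week the tooled team's cumulative output matches or
    exceeds the all-builders team, or None if that never happens in `horizon`.

    All defaults are illustrative assumptions, not measurements.
    """
    baseline_total = 0.0
    tooled_total = 0.0
    for week in range(1, horizon + 1):
        # Tools built in earlier weeks speed up everyone still doing the work.
        multiplier = 1.0 + weekly_gain * (week - 1)
        baseline_total += team_size
        tooled_total += (team_size - toolsmiths) * multiplier
        if tooled_total >= baseline_total:
            return week
    return None
```

With these made-up defaults the crossover comes in week 24; if the tools never improve anything (`weekly_gain=0`), the tooled team never catches up.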

If you believe that your company has too few toolsmiths: you should have an excellent opportunity to have an outsized impact quickly.

As a career

I would generally recommend working on developer tools if you enjoy understanding the nitty-gritty of systems programming and programming languages and still enjoy owning a product. I've often had a chance to understand systems and write complex tree traversals for visualizations. (Yes, sometimes you do get a chance to use those interview question solutions.) You also have the delightful opportunity to be your own customer, which means your intuition often guides you in the right direction.

It's also highly satisfying to see people move faster and solve previously impossible problems because of your tools. Given the sheer amount of improvement possible, working on useful tools tends to work out career-wise as well.

There are downsides, of course. Toolsmiths are at least one step removed from the actual work that's going on – it can be hard to explain what we built to anyone without context (or violating NDAs). Depending on how enlightened your management is, your team might also be severely underfunded for what it needs to accomplish, mainly because the effect of good tools isn't generally easy to measure.

Over the larger arc of your career, I'd recommend switching between roles every so often: build a product, build tools to make that experience better; go back and continue building the product; and repeat. You'll become a more rounded and effective engineer once you have experience across both sides. It's not a completely binary decision either; you could always split your time and play toolsmith for a part of the week.

Finally, if you have senior anywhere in your title or job description: an implicit part of your job is to make the people around you more effective. Diving into – and fixing – tools should be a meaningful use of your time.

Types of Opportunities

As a toolsmith, I like to break down opportunities into three categories:

- tools that let you do what you couldn't do

- tools that let you do what you already do, but better (faster, easier)

- tools that change how you work

Each of these can be extremely valuable, depending on the circumstances. Having these in place often makes it easier to reason about the tools: particularly around execution and prioritization. I'll start by diving into these categories and then talk about how I prioritize between them.

Enabling new, previously impossible work

These tend to be the tools that customers most appreciate, and at the same time, the most exciting to build. It's also extremely rare to get these opportunities – make the most of the ones you find.

Some examples include the ability to inspect the state of programs: record/replay debuggers; fleet-wide telemetry that tracks the lifetime of requests and allows observing global behaviors; easy access to eBPF-like instrumentation to precisely understand what's happening inside the kernel.

I also consider completely automating an existing workflow a part of this bucket: removing the need for human toil also opens up brand new capabilities both for the problem being solved and allows for much more meta-analysis.

Similarly, if you can speed up work by several orders of magnitude, you completely change its nature. Consider speeding up query execution from one day to 30 seconds – people will start doing quick, iterative exploratory queries to find the answers they need; instead of spending hours or days planning them out upfront. Which, in turn, leads to significantly faster and better work.

Improving existing workflows

Customers and toolsmiths most commonly identify this type of opportunity: no one (well, almost no one) likes to wait for their computer to do something. It's also remarkably easy to justify, validate, and measure, which makes it particularly execution-friendly.

There's almost always an opportunity for making things go faster: you could speed up builds, make pages load faster, speed up CLI start times, or even throw money at a problem and get everyone faster machines.

Similarly, you can also spend time making things easier: build affordance into complex tools, add documentation, tooltips, improve the design and user experience – let them onboard without reading a dense manual. You can also truly unlock the potential of a tool from the previous category by making it accessible.

Consolidating tools to make it easier to complete a task is another common category that falls into this bucket: making it easier to continue a job from one tool to the next or simply merging them into a single tool. I strongly prefer composable tools to giant monoliths.

Changing engineer behavior

Often enough, certain habits would be valuable for engineers to have; for improving code quality, following best practices, avoiding brittle behavior, etc.

Tools can guide people gently in these cases: linters, smart autocompletes, and automated tests that guarantee broken code can't ship are a few examples. Others include tools that track data provenance and automatically check the health of the data, and tools that look for sensitive strings in logs.
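As one small illustration of a behavior-shaping tool, here is a minimal sketch of a check for sensitive strings in logs. The pattern names and regular expressions are illustrative assumptions; a real check would be tuned to the secrets that actually exist in your environment.

```python
import re

# Hypothetical patterns, chosen only for illustration.
SENSITIVE_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "bearer_token": re.compile(r"\bBearer\s+[A-Za-z0-9._~+/-]+=*", re.IGNORECASE),
    "password_assignment": re.compile(r"\bpassword\s*[=:]\s*\S+", re.IGNORECASE),
}


def find_sensitive_strings(lines):
    """Yield (line_number, pattern_name) for every suspicious log line."""
    for number, line in enumerate(lines, start=1):
        for name, pattern in SENSITIVE_PATTERNS.items():
            if pattern.search(line):
                yield number, name
```

Run as a pre-commit hook or a CI step, a check like this nudges people away from logging secrets without anyone having to police it by hand.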

Occasionally, you can even try to use these to slowly guide the culture of the company towards behavior you want to encourage. You could push for better quality meetings by explicitly requiring an agenda and providing templates for effective meetings; or improve the diff review process by adding templates for creating commits with checklists for code review.

Prioritization

There's nothing as valuable as enabling otherwise impossible work. Even barely usable – but working tools of this category – tend to get a lot of adoption and use. Accordingly, I tend to prioritize these the highest for immediate impact, shipping a functional MVP to unblock engineers quickly and then iterating to make them more valuable and usable.

And then there's everything else that you could do: UX, quality of life, or speed up people along their way. Making powerful tools accessible to more engineers, eliminating – or reducing – the time people spend staring at their screen and waiting for results is always valuable.

Tools that help build behaviors tend to be outside the standard prioritization cycle: either you need to implement them quickly to respond to major outages and external requirements or bake them into your organization and see them change behavior over long periods.

Identifying Opportunities

Figuring out what to work on – and even worse, prioritizing it – can be extremely hard given a broad mandate to improve developer efficiency. There are often several conflicting signals: some obviously broken workflows, loud – and repeated – complaints and suggestions from the engineers, and occasionally just the observation that similar teams seem to be able to work much faster.

Some of the approaches I use to explore the world include:

Become the customer and do the work

The best way to build customer empathy is to be the customer: you develop a strong intuition around what's important, what's fluff, and what's possible.

And when you're developing for yourself, when you are the customer, you've got such a crisp idea of what to do. And I always believe that we do our best work when we are our own customers.

As a developer, occasionally, you'll be lucky enough to be a customer already; if you aren't – become the customer. Pick a minor project – something the customers would use to ramp up a junior engineer – and execute to the best of your ability.

Working through the problem will also expose you to all the constraints faced by your customer engineers. It can also be humbling to realize that the tools you spend so much time building are a tiny part of their day and that they have very little time – or energy – to engage with the tools you build. Which might also be a hint to focus on different problems or tools.

Doing this needs some finesse and air cover from management: it takes a significant amount of time to ramp up, and the toolsmiths involved will not necessarily be very productive. Make it safe for your toolsmiths to experiment, with expectations around building and sharing understanding around the customers' problems. I'll write a whole separate note around embedding and making the most of the process someday.

You need to have buy-in from your customers; that helps ensure your toolsmiths get meaningful work – and not make-work. Embedding also enables you to build excellent long-term relationships.

Ask directly about the most significant problems

There are some shortcuts if you can't embed or have too many different sets of customers. The first and obvious one is observing and interviewing customers. There's a warning I must give you first: focus on the problems and not the solutions they ask for.

It's often tempting – and sometimes necessary – to build precisely what customers ask for (particularly for building relationships and trust). Unfortunately, most immediate requests are for better horses and not for cars. Customers are trying to get their jobs done as fast as possible – they aren't necessarily thinking about what's potentially viable, building a bigger picture from disparate requests across different sets of customers, or looking for order of magnitude improvements in workflows.

That's your job.

Respect & carefully understand existing solutions

More often than not, your customers will have ad hoc scripts, half-baked runbooks, and other forms of duct tape lying around. Treat these like gold – they will often do at least ~80% of what you want to accomplish, implemented in ~5% of the time with minimal resources.

Learn from these tools and potentially use them as starting points for integrating your own (slowly subsuming them in the process). Occasionally, you might have significantly better ways to tackle the problem – make sure it handles all the cases the duct tape is for, or no one will adopt your solutions.

Collect just enough data

Data is valuable but also potentially misleading. Having direct access to customers for direct observation and collecting anecdotes will get you very far. Use data to understand the state of the world and potentially determine the most impactful problems.

At the same time, don't over-index on the metrics: I talk about this more deeply elsewhere, but blindly relying on metrics like retention for tooling can be highly misleading.

Find inspiration around you

With the sheer number of operating systems, languages, and sets of tools available, there's almost always "competition" of some sort you can take inspiration from. If you're improving Android Studio, look at how Xcode does things. If you're working on Vim, learn from Emacs.

Sources of inspiration don't need to be current in time. I often find a wealth of valuable information looking at languages that aren't as popular today; for example, Smalltalk gives me an excellent idea of what a truly powerful REPL – or Jupyter Notebook – could achieve.

Keeping current with experiments, research, papers, and the latest in technology can be extremely helpful. It also gives you a valid excuse to browse Hacker News at work: you're doing research.

A Checklist For a Well-Built Tool

Building anything involves trade-offs: this particular checklist describes the direction I try to push trade-offs towards, to the best of my ability. I strongly recommend designing and building your tools carefully and thoughtfully – the craft applied will shine through.

Make it as simple as possible

As toolsmiths, we have way too much space in our heads for the tools we use; we're constantly evaluating how and why they work. And that we could have done a much better job. Obviously.

Engineers trying to do their day jobs have a significantly different perspective: they encounter dozens of tools every day. Each of these tools is yet another annoyance they need to navigate as quickly as possible. Several web apps, some form of IDE, source control, some compilation/build mechanism – just to get started. Then there'll be tools for deployment, data management, and actual business logic.

Make usable tools that use the least amount of energy and time to operate correctly. Aim to build a minimal and functional user experience and lightweight design with minimal cognitive overhead. In other words, make sure you add affordance to your tools.

Make it magical but also transparent

I greatly appreciate tools that configure themselves to my circumstances automatically and tell me the best options – tools that push me into the pit of success. At the same time – they should also explain why these options are the best.

Make tools that are amazing even if I want to do something you didn't anticipate. Provide information around why it's taking certain decisions – potentially with inline logs, tooltips, or even an easily accessible "run log" that captures everything that happened. Give users the freedom to choose the (hopefully) rare instances they need to go against defaults.
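A minimal sketch of what "magical but transparent" could look like in code: the function picks a default on its own, records why in a run log, and still honors an explicit override. The function name and the "leave one CPU free" rule are assumptions made up for this example.

```python
def choose_worker_count(cpu_count, override=None, run_log=None):
    """Pick a worker count automatically, explain why, and honor overrides."""
    run_log = run_log if run_log is not None else []
    if override is not None:
        # The user knows something the tool doesn't: respect it, but say so.
        run_log.append(f"workers={override}: set explicitly by the user")
        return override, run_log
    workers = max(1, cpu_count - 1)
    run_log.append(
        f"workers={workers}: detected {cpu_count} CPUs, leaving one free for the system"
    )
    return workers, run_log
```

Surfacing the run log on request means a surprising default can be understood, and then overridden, without reading the tool's source.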

Make the common workflows easy

Work that needs to be done frequently, particularly by new users, should be trivial and intuitive; without overwhelming them. Things should just work – and be magical. Push your n00bs into the pit of success, and teach them along the way.

Structure tools in a way that layers complexity: everyday work should require minimal effort spent learning how to do it.

Make the rare workflows possible

Leave space for power users who need to do complex work: don't neuter the tool to keep it easy for new users. It's surprisingly easy to accidentally hobble tools too much with the idea of avoiding complexity.

Leave space for people to completely override the tool's decisions if they're willing to accept the consequences of doing so, which is why transparency plays a vital role. Respect your power users as the ultimate experts and let them do what they need to.

Enable customization

One of the balances tool builders need to strike is between building a generic but not particularly useful tool – or one that satisfies the very custom needs of every single team and looks like an aeroplane cockpit.

Instead of painfully trying to navigate this tight-rope with unsatisfying compromises everywhere, make it possible for users to customize tools to their satisfaction. Your customers are engineers too, and you should let them use their skills to maximize the value they get.

As always, there are trade-offs with this approach – particularly around ownership when things break and the added cost of maintaining a reliable interface for customers. You can mitigate this partially by sandboxing customer-specific changes to each customer – they're allowed to make the tool their own but can't break it for anyone else.

Enable composition

Command-line tools almost get this for free, thanks to pipes, shell scripting, and the ability to quickly duct tape together text parsing. Explicitly supporting composition – with JSON outputs, clean interfaces, or treating flags as an explicit API – makes tools far more valuable and useful.
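Here is a minimal sketch of explicit support for composition in a CLI: the same results can be rendered for humans or, with a `--json` flag, for other programs. The tool name `buildstats` and the sample data are hypothetical.

```python
import argparse
import json


def build_parser():
    parser = argparse.ArgumentParser(prog="buildstats")  # hypothetical tool name
    parser.add_argument(
        "--json", action="store_true", help="emit machine-readable JSON"
    )
    return parser


def render(results, as_json):
    """Format results for a person, or as JSON for the next tool in a pipe."""
    if as_json:
        return json.dumps(results, sort_keys=True)
    return "\n".join(f"{r['target']}: {r['seconds']:.1f}s" for r in results)


def main(argv=None):
    args = build_parser().parse_args(argv)
    # Placeholder data; a real tool would collect these measurements.
    results = [{"target": "//app:server", "seconds": 42.5}]
    print(render(results, args.json))
```

Treating the JSON shape as part of the tool's API lets customers pipe it into their own scripts without scraping the human-readable output.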

UIs, unfortunately, have generally been terrible in terms of easy composition. You still have options – make it embeddable to allow reuse, allow accessing the underlying data with an API, explore options like Jupyter Notebooks – or Retool; keep the UI decoupled from the inputs and outputs, making it easier to compose.

Some tools also allow for both a CLI / usable library and a browser-based interface, which helps significantly. Ensure the two implementations are tied together cleanly, and ideally, each interface coaches you on achieving the same results using its counterpart.

Persist navigation state

It often takes several clicks to find the right view in a UI, making shareable and persisted state extremely important. Good UIs make it trivially easy to bookmark a particular state – to return to or to share.

If you frequently find instructions that ask users to follow several steps walking through a UI, you probably need to make it possible to link to different desired states directly.
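One common way to make UI state linkable is to keep it in the URL's query string. Here is a minimal sketch of the round trip, assuming the view state is a flat mapping of strings; the keys and the example URL are hypothetical.

```python
from urllib.parse import parse_qs, urlencode, urlsplit


def state_to_url(base_url, state):
    """Encode the current view state into a shareable, bookmarkable URL."""
    # Sorting keeps the URL stable regardless of the order the state was built in.
    return f"{base_url}?{urlencode(sorted(state.items()))}"


def url_to_state(url):
    """Recover the view state from a shared or bookmarked URL."""
    query = parse_qs(urlsplit(url).query)
    return {key: values[0] for key, values in query.items()}
```

For example, `state_to_url("https://tools.example.com/dashboard", {"service": "checkout", "range": "24h"})` yields a link that restores the same view when it is parsed back with `url_to_state`.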

For a CLI, an equivalent is helpfully printing out a direct command – or generating a file – after a user navigates through many menus and chooses options. A good example is how menuconfig complements other more direct mechanisms.
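A minimal sketch of the CLI version: after an interactive session, print the one-line command that reproduces the same choices. The program name and flags are hypothetical, and the mapping from choices to flags is an assumption of this example.

```python
import shlex


def equivalent_command(program, choices):
    """Build the non-interactive command matching choices made in menus."""
    args = [program]
    for flag, value in choices.items():
        if value is True:
            args.append(f"--{flag}")  # boolean switch that was turned on
        elif value is not False and value is not None:
            args.extend([f"--{flag}", str(value)])
    # shlex.join quotes each argument so the result is safe to paste into a shell.
    return shlex.join(args)
```

Printing this at the end of a menu-driven run teaches users the direct route and gives them something to paste into scripts and runbooks.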

Fast, or at least asynchronous

Fairly self-explanatory – the whole point of building tools is to make people more effective, and a painfully slow tool that eats into everyone's time is hardly valuable (except for when the alternatives are even worse).

Never make customers explicitly wait for results – allow them to exit and return when convenient, possibly with a notification on completion. The last thing I want is to spend hours waiting and then lose all progress because my browser tab crashed.

If it's prohibitively costly to be faster, try to hide the slow parts instead, possibly by pre-computing and caching results.
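Here is a minimal sketch of the "don't make customers wait" idea: submitting work returns a job ID immediately, and the caller checks back whenever convenient. The `JobRunner` class is hypothetical and keeps everything in memory; a real tool would persist job state so that results survive a crashed tab or a restarted process.

```python
import uuid
from concurrent.futures import ThreadPoolExecutor


class JobRunner:
    """Run slow work in the background and let callers poll for results."""

    def __init__(self, max_workers=4):
        self._pool = ThreadPoolExecutor(max_workers=max_workers)
        self._jobs = {}

    def submit(self, func, *args):
        """Start the work and return an ID right away instead of blocking."""
        job_id = uuid.uuid4().hex
        self._jobs[job_id] = self._pool.submit(func, *args)
        return job_id

    def status(self, job_id):
        """Report whether the job is running, failed, or done with a result."""
        future = self._jobs[job_id]
        if not future.done():
            return {"state": "running"}
        error = future.exception()
        if error is not None:
            return {"state": "failed", "error": str(error)}
        return {"state": "done", "result": future.result()}
```

The job ID is also a natural thing to put in a notification or a shareable link once the work completes.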

Reliable & Trustworthy

Broken tools lead to unhappy engineers, particularly those investigating something complex. You never want to be in a position where you're not quite sure if you've identified a problem or it's simply an artifact of a tool you're currently using.

It's unfortunately easy to have subtle yet extremely troublesome problems. For example, a tool I wrote to render JSON would silently mangle large numbers beyond Number.MAX_SAFE_INTEGER and corrupt large numeric IDs.

Identify these and fix them as soon as possible; better yet, anticipate the ones that could break the central value of the tool and solve them before release.
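The large-number problem above is easy to reproduce. This sketch uses Python to mimic JavaScript's behavior of parsing every JSON number as a 64-bit float, and contrasts it with a parse that keeps integers exact; the function names are made up for the example.

```python
import json

# Same value as JavaScript's Number.MAX_SAFE_INTEGER.
MAX_SAFE_INTEGER = 2**53 - 1


def parse_like_javascript(text):
    """Parse JSON with every number as a 64-bit float, as JavaScript does."""
    return json.loads(text, parse_int=float)


def parse_preserving_ids(text):
    """Python's default: JSON integers stay arbitrary-precision ints."""
    return json.loads(text)


payload = '{"id": 9007199254740993}'  # MAX_SAFE_INTEGER + 2
mangled = int(parse_like_javascript(payload)["id"])
exact = parse_preserving_ids(payload)["id"]
```

Here `mangled` comes back as 9007199254740992 while `exact` keeps the original 9007199254740993: the ID has silently changed by one. A common mitigation is to transport large IDs as strings.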

Make it Work, Make it Right, Make it Fast – Kent Beck

Effective Execution

tl;dr; make significant improvements with small changes while constantly collecting feedback. I believe this is yet another bastardized version of agile, but this one is mine.

Add value immediately

The tools we build are a means to an end: improving the efficiency of people working on the actual problem – and you should always have a strong bias towards immediate improvement.

Building value from the very first engagement helps give you a better sense of the problem space, builds trust with your customers – and motivates them to make time to engage with you and help you ramp up in a new domain.

You also immediately start changing the curve for solving problems, which has positive knock-on effects even if everything else fails.

Improve what people already use

Instead of building an entirely new tool that people then have to

- find out about

- be convinced of adopting

- and then work through shortcomings or bugs or missing features

– make it easier on yourself and your customers.

Improve the tool they use the most: particularly in case of a proliferation problem, choose the most valuable tool and start building on top of it.

While working in a brownfield isn't as pleasant or fast as a greenfield project, it has a lot of advantages:

- Customers are going to start using your work immediately and giving feedback (often loudly) – and you'll immediately find out if it's valuable or not.

- Working directly on the existing solution means that you'll account for strange edge cases upfront instead of reaching 90% completion and then realizing you still have another 90% to go.

- Your customers are already in place because you decided to go meet them where they are: you don't need to do significant evangelization or education.

One argument I hear often is that brownfield development can constrain the solution and miss out on paradigm-changing efforts. I almost always argue that we can do both: building a path that lets us slowly stack incremental improvements till we have something an order of magnitude better, with a gradual ramp on for the customers.

Of course, this will be slower than doing a greenfield project: but you're much more likely to succeed, and you've been speeding up other engineers throughout this process – significantly increasing the payoff.

Build simple, composable, and customizable tools

Our customers are developers: they're familiar with programming and the stuff that powers these tools in the first place. Freeing them to customize the tools to their particular problems will enable much more satisfaction and productivity.

Allowing end-users to customize tools for themselves helps break free of several painful constraints and design decisions that otherwise come into play. There are often features that happen to be extremely valuable for a tiny subset of people and obscure and utterly unusable for everyone else. Allowing that set of people to build and run that feature just for themselves completely sidesteps that problem. Keep the core tool composable to make it easy for customers to build – and maintain – workflows they want.

Keep the core of your tool simple: which will help with reliability, maintenance, and speed.

Maximize leverage

Make sure that time spent by toolsmiths acts as a true force multiplier for the rest of your engineers: directly trading off a toolsmith's time 1:1 for an engineer's time is most often a wasted opportunity.

Getting the most value from your time is pretty much the whole point of this series, but I wanted to remind you to make sure you keep this idea in the back of your head while making any decisions.

Traps For Tool-Builders

There are some anti-patterns that I often see tool builders fall into:

Fixating on tools instead of problems

The tools we build are a means to an end and not an end in themselves: focus on the problems being solved instead. A symptom is a poorly functional Frankenstein Swiss Army knife that started its existence as a hammer.

Solving this behavior is somewhat tricky: you want to incentivize your tools teams to take good care of the tools they build without causing tunnel vision.

One approach is to have part of your team responsible purely for the tool itself, while a different part works towards new solutions for problems; with goals and incentives aligned appropriately.

Assuming your customers care about tools

Most of your customers don't spend their days thinking about the tools they use, the underlying complexity, or how much their lives could be better with nicer tooling.

Instead, they're trying to get their jobs done: shipping products, building infrastructure, training models, etc. Each tool is one of the hundreds they encounter every day, and they're generally not thinking about it as they go about their work.

There are two significant consequences:

- Too much subtlety in a tool will backfire: it should be simple, obvious, and direct. Don't take up more space in your customer's brain than you need to.

- Be careful of direct asks from customers: understand their problem deeply, but consider all solutions instead of only the ones they recommend. Often enough, they don't have a sense of what's possible with good tools, but you do.

Starting by consolidating all the things

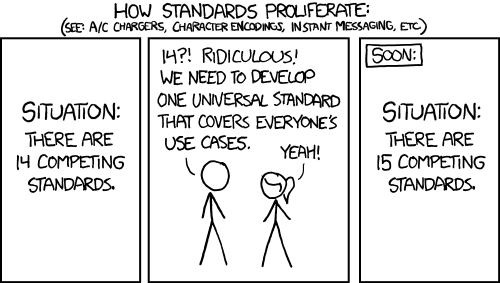

The most observable problem is generally an over-proliferation of tools people need to use in their day-to-day jobs. The solution is often to invent the (n+1)th iteration that inevitably does only ~90% of what the original tools did, and generally imperfectly with less flexibility.

XKCD captures this perfectly: standards, tools, processes, books; this is one pattern that repeats again and again.

I have a couple of recommendations to avoid this particular trap:

- Make sure you identify the actual pain points before quixotically tilting towards the windmills of One Browser Tab and stuffing a lot of workflows into a kitchen-sink equivalent. Prioritize these and actually speed up people.

- There will be some places where consolidation does make significant sense: particularly if a lot of information needs to move between tools and is manually copy/pasted. Prioritize interlocked/coupled systems, allowing for smoother workflows.

- When you do work on tool consolidation, choose the least broken and most comprehensive existing tool and improve it in place. Your changes will get validated in real-time, and you'll work with full awareness of all the complex edge conditions (particularly the sneaky ones that tend to derail the best-run projects).

- Coupling tools strongly leads to fewer options: try to make different tools compose smoothly and keep them flexible to maximize the value they provide.

Making things worse without realizing it

The downside of having so much leverage is that you also can make things significantly more painful for your fellow developers.

In more obvious ways: breaking or slowing down tools will have significant consequences for the happiness and productivity of everyone around you.

In more insidious ways: simply changing existing workflows comes with a high cost that generally doesn't bubble up very obviously. Any change to an existing workflow will come with a price to your current customers, particularly the power users. Be intentional about what you change, and always try to do it in a backward-compatible way.

You're almost certainly going to be surprised at the different workflows people have adopted around your tools.

Prioritizing form over function

A trap I repeatedly fall into is unconsciously prioritizing a pixel-perfect layout instead of building a valuable tool.

If a tool can get the job done, people will use it regardless of a dated, painful UI. If it can't get the job done, people will not use it irrespective of how polished it looks.

In a perfect world, aim for both polish and features; if you have to drop one, drop the polish. Do remember that a functional but unusable tool doesn't get the job done either. I never said this would be easy.

Iterating too slowly

If your tools-teams need tools-teams, you might need to reconsider. Large teams will be more likely to build more complex solutions with far less leverage than smaller, more focused teams.

As always, this is a balance to maintain: too small a team will self-destruct with burnout; too large a group will collapse under its weight.

Just make sure that you can adapt to the changing world – and your customer's needs – quickly. Tools should improve much faster than the final product being built. Building something that would have been useful six months ago is worse than not building anything at all.

Overwhelming your customers

Two insidious traps to avoid while interacting with customers:

- Don't rely on a game of telephone to understand your customers' needs. More often than not, the customers are engineers you work with – talk to them directly and make sure you truly understand the problems they need to be solved.

- Don't ask customers too many questions either: they still need to get their jobs done. Be disciplined about how much of your customers' time you use; document everything you learn to avoid repeatedly asking the same questions.

Avoiding domain-specific knowledge

A typical behavior I see in toolsmiths is that they avoid going deep into the domain they're building tools for, often staying just at the fringes and relying on customer reports to figure out if their work is valuable.

Please don't do this: I've been this person a couple of times in the past (primarily because of a lack of time and not by choice), and I've inevitably left a lot of impact lying on the table because I didn't realize how useful some features would have been (instead of polishing UI or taking customers at face value).

You can short circuit this process by hiring engineers with domain knowledge to help build out your tools – even if they're only on loan from your customers. Time spent deep in the domain doing the work pays for itself very quickly.

Ignoring the surrounding systems and context

The constraints and options available to an internal tools team are incredibly different from those available to a company building developer tools for enterprise customers. It's generally not a good idea to copy/paste approaches – particularly around identifying and releasing products – directly.

Make sure you make the most of your environment: an internal tools team can ship much more rapidly, deeply understand their customers, and build significantly more bespoke tooling. Tooling companies can solve much broader problems with far more resources and options to make the best tool possible.

Assuming there's one ideal way to do things

People think in different ways: everyone has their approach to solving problems – and different sets of tools they prefer to use. Assuming that there's precisely one perfect solution for everything will overly constrain your solutions – or make them impossible to implement.

Building composable, extensible tools helps navigate this better: for example, the Language Server Protocol brings excellent tools into any editor that supports it. Building language servers avoids constraining the choice of IDE (which tends to be highly personal) but still adds value for all engineers.

Believing that tools can replace thinking

There's only so much that tools can do: they can't replace someone actively and carefully thinking about the problem at hand. Don't expect them to – and don't set expectations that they can – to avoid

Meaningful Measurements

tl;dr: Don't use metrics as a crutch to avoid thinking and understanding.

Metrics are often considered the only way to observe and validate success, making them incredibly important to get right. I have a love-hate relationship with metrics: I find them useful as a proxy for estimating success, but metrics treated as an end in and of themselves lead to pathological behavior.

So far into my career, I still prefer meaningful qualitative explanations to multiple abstract and obscure metrics: one of these implies proper understanding, the other one not so much.

Metrics are a map, not the territory

Before you invest a lot in metrics – particularly those aimed at measuring developer productivity – spend some time reading about the state of the art on the subject. You'll find that it's tough to get clear answers around what's better – and you probably don't have the time to research the best ways to evaluate engineering productivity.

Metrics, in general, are a reduction of the world into something simpler that is easier to grasp, track and coordinate on. Never assume that instrumentation represents the world entirely – nor incentivize others to do so.

Retention metrics measure the problem and not the solution

Before you set goals around increasing your tool's retention metrics, check whether your customers have any alternative approaches available.

Your coworkers are generally highly motivated, incentivized people. If there's only one way to achieve their goals – and that's through your tool – then they'll suffer through it regardless of quality.

Accordingly, treat high retention as a good sign that the problem is essential to the business; it generally doesn't reflect on the quality of the tool itself.

Don't rely on usage statistics to dismiss solutions

Conversely, if people aren't currently using your tools, it can be for two reasons: either it's not part of their daily workflow, or the tool in question is not particularly well built. But it could still be essential when it's needed.

It's worth looking into the lack of use carefully; often enough, low usage statistics hide valuable opportunities, especially if there are no convincing qualitative explanations.

Set goals on business outcomes instead of tools

The argument the book How to Measure Anything makes against unmeasurable work is that there is always some change in the world you want to achieve. Measure that as your metric instead of the otherwise intangible or hard to quantify changes you're making.

Some positives: it'll also keep you and your team focused on the actual problem instead of treating the tools as ends, and moving these metrics is guaranteed to translate into meaningful victories. That's not true at all for tool- or execution-specific metrics.

On the flip side, attribution towards "how much value your specific tool added" can be much more challenging. Hopefully, people don't care too much about this, but if they do (and you can't convince them otherwise) then you could consider having some additional proxy metrics as an explicitly extremely tentative measure of progress, with caveats.

Complex metrics cost more than they're worth

Simple, easy-to-explain metrics are much more likely to point you in the right direction. Complex models are hard to validate, understand and execute on – and often end up costing a tremendous amount of energy (and several data scientists) to maintain and understand.

You're also unlikely to find out quickly if the metric breaks, which often has disastrous consequences: not unlike navigating with a broken compass. More complex metrics can also make it significantly more challenging to do any statistical analysis to confirm whether changes are significant or simply noise.

If you can't set goals on business metrics (previous point) for whatever reason, keep your tool metrics extremely obvious and direct. Some examples of simple numbers which are easy to compare and reason about include time spent waiting for a computer, release cadence, and crashes in production.
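One advantage of a simple metric is that checking whether a change is real or just noise takes only a few lines. As a rough sketch (the function name `permutation_p_value` and the sample build times are hypothetical, and a permutation test is just one reasonable choice rather than anything this series prescribes), you can shuffle the before and after samples together and count how often chance alone produces a gap as large as the one you observed.

```python
import random
import statistics


def permutation_p_value(before, after, trials=2000, seed=0):
    """Two-sided permutation test on the difference in means.

    Returns the estimated probability of seeing a gap at least as large as
    the observed one if `before` and `after` came from the same distribution.
    """
    rng = random.Random(seed)  # fixed seed keeps the result reproducible
    observed = abs(statistics.mean(after) - statistics.mean(before))
    pooled = list(before) + list(after)
    hits = 0
    for _ in range(trials):
        rng.shuffle(pooled)
        shuffled_before = pooled[:len(before)]
        shuffled_after = pooled[len(before):]
        gap = abs(statistics.mean(shuffled_after) - statistics.mean(shuffled_before))
        if gap >= observed:
            hits += 1
    # The +1 counts the observed arrangement itself, so p is never exactly 0.
    return (hits + 1) / (trials + 1)


# Hypothetical build times in seconds, before and after a tooling change.
before = [30, 31, 29, 32, 30, 28, 31, 30]
after = [20, 21, 19, 22, 20, 18, 21, 20]

print(f"p-value: {permutation_p_value(before, after):.4f}")
```

A small p-value suggests the shift is unlikely to be noise; a value near 1 suggests there is nothing to explain yet. Doing the same for a composite, heavily modeled metric is much harder to reason about.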

Do a sensitivity test

Be sure that your metrics catch actual changes in behavior. Test out a couple of scenarios you want to be sure your metrics capture by generating fake numbers corresponding to those situations and checking that they show up (and don't get lost in the noise).

For example, a metric that only shows significant changes if 50%+ of your users can't use the tool isn't very valuable: you'll have far louder and quicker signals (such as a mob carrying pitchforks complaining about the broken tool).

You might find value in looking at higher percentiles, outliers, and potentially playing with an HDR Histogram.
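A sensitivity test along these lines can be quite small. The sketch below is illustrative only: the scenario (2% of builds becoming ten times slower), the 30-second baseline, and the helper names `percentile`, `inject_slowdown`, and `sensitivity` are all assumptions made up for this example. It generates fake wait times, injects the failure, and reports how far each candidate metric moves.

```python
import math
import random
import statistics


def percentile(values, pct):
    """Nearest-rank percentile (pct in 0..100) of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(len(ordered) * pct / 100))
    return ordered[rank - 1]


def inject_slowdown(samples, fraction, slow_value, rng):
    """Copy `samples`, replacing a random `fraction` of them with `slow_value`."""
    broken = list(samples)
    count = round(len(broken) * fraction)
    for index in rng.sample(range(len(broken)), count):
        broken[index] = slow_value
    return broken


def sensitivity(metric, baseline, scenario):
    """Relative change in `metric` between the baseline and the scenario."""
    before = metric(baseline)
    return (metric(scenario) - before) / before


rng = random.Random(42)  # fixed seed so the fake data is reproducible

# Hypothetical baseline: 1,000 build waits of roughly 30 seconds each.
baseline = [rng.gauss(30, 5) for _ in range(1000)]
# Hypothetical scenario: 2% of builds suddenly take 300 seconds.
scenario = inject_slowdown(baseline, fraction=0.02, slow_value=300, rng=rng)

metrics = {
    "median": statistics.median,
    "mean": statistics.mean,
    "p99": lambda values: percentile(values, 99),
}
for name, metric in metrics.items():
    print(f"{name}: {sensitivity(metric, baseline, scenario):+.1%}")
```

With these made-up numbers the median barely moves, the mean shifts modestly, and the 99th percentile jumps several-fold. If the scenario matters to you, only the last of these would reliably flag it.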

Always have qualitative explanations

Most importantly, you should always be able to explain, in words, why your metrics moved in a particular direction. Ideally, you can triangulate an approximation of the change from secondary sources as well.

If you can't explain the changes, you should stop trusting the metric and start digging into the instrumentation. Never celebrate metric-only victories that don't have a meaningful explanation. This is one of those rare times I feel comfortable giving unqualified advice.

Do investigate metric-only losses carefully: either your tools broke, your metric is faulty, your understanding of your metric is incorrect, or your instrumentation is buggy. Try to triangulate your observations with multiple sources of data.

Snapshots of Excellent Tools

There are many excellent tools out there that I often find myself thinking about as I build tools of my own; this list is shaped by the ones I've had a chance to use and see. Obviously, it is not exhaustive – and I have several blind spots around otherwise excellent tools (particularly those built by Microsoft and Apple).

I'll use this note to highlight what I think some tools got exceptionally right:

eBPF

eBPF checks several boxes: it enables insights and modifications that used to be impossible, composes remarkably well to allow building several meta-tools, and is fully customizable (you write eBPF programs, and you can modify your code to support eBPF better with uprobes).

Emacs

Emacs's excellent extensibility is the core of the program. With primitives designed for editing, it's one of the simplest ways I can imagine to build an editor perfectly suited to you.

I know I have, and so have others.

Vim

Where Emacs is an extensible meta-program, Vim is a knife for editing text as efficiently as possible. I particularly like the editing "language" for quickly navigating and changing text: everything from macros to mnemonics. I greatly appreciate the speed and simplicity.

LogSeq

The newest addition to the set of tools I use: I appreciate the care taken to ensure the backing data is under my control; the ability to use Org-mode is terrific. But the reason I include it here is how well it lends itself to organically creating different systems for organizing information.

The same likely applies to Roam Research, Athens, and other tools; I've only used LogSeq aggressively and regularly.

Suckless (dwm, st)

The simplicity of suckless tools makes them lean, fast, and unsurprising. The code is far more compact than that of any equivalent tool, making failures easier to debug.

Rust Analyzer

Rust Analyzer shifts compile-time feedback into editing time and acts as a significant force multiplier: I can see types get reified as I write code, making it significantly faster and more straightforward to iterate.

GitHub

GitHub pays a lot of attention to making it easy to get started: there's consistent documentation on what commands to run and how to run them at every step of the way.

IntelliJ IDE

IntelliJ gets a ridiculous amount of things right: everything is accessible by the keyboard, and there's a shortcut to list out all the commands you can run – excellent affordance.

Android Studio

Android Studio takes care of most of the boilerplate for Android, making it much easier to get started. Android has several peculiarities around file organization, and Studio helps smooth over a lot of them – establishing a pit of success for Android developers who might otherwise waste obscene amounts of time simply setting up projects correctly.

Systrace

Android's Systrace is striking and straightforward; it's easy to use to visualize any traces and not just the ones generated by systrace. Several different systems use systrace for visualization, demonstrating how well it composes with other tools thanks to the simple trace format and decoupled UI.

Navigating inside a trace with a keyboard reminds me of playing a video game: WASD moves within and zooms into the trace. Finding out-of-the-box support for Dvorak with ",aoe" as alternatives made me very happy.

Chrome Web Inspector

The sheer interactivity and thoughtful design of the console are delightful. I particularly appreciate how well the developers handle logging large amounts of data: large objects and arrays are automatically shown with strategic "…" to prevent overwhelming the browser.

I've always found the web inspector – and Firebug, the OG predecessor – to be carefully designed by people who actively use it, and it shows in all the little behaviors.

Books & Other Resources I've Found Useful

There are several resources I've found invaluable while building tools over the years: this is a perennially incomplete snapshot that describes some of them.

For Inspiration

No list about building tools is complete without Douglas Engelbart's The Mother of All Demos (youtube). We have a long way to go.

For something more recent, watch Inventing on Principle by Bret Victor before you sit down to build something.

On Visualization

Good visualizations can make a tool; unreadable ones filled with chart junk can break it. Good visualizations are your first line of defense to simplify complex domains with a lot of data.

Edward Tufte's Visual Display of Quantitative Information is my favorite of all the books I've read on visualization. It's also an astonishing compendium of beautiful visualizations built by people over centuries.

On Usability

The Design of Everyday Things by Don Norman is excellent for building intuition around making usable tools with affordance. You want to develop tools that push people into a pit of success; read this book to learn from examples.

On Metrics

Douglas W. Hubbard's How to Measure Anything is an excellent way to figure out how to measure progress – and particularly figuring out how much you're willing to spend to get that measurement.

At the same time, I enjoyed this talk by Fred Kofman that identifies the pros and cons of locally vs. globally valuable metrics – and informs why I prefer to set goals on global metrics.

It's also valuable to recognize the limitations of measuring software productivity. I found it enlightening to look through Making Software: What Really Works to understand how hard it is to measure productivity and how much care any attempts to do so require. I've only skimmed this book, but I wasn't particularly encouraged about the feasibility of measuring engineering productivity well.

On Building Software

Fred Brooks's The Mythical Man-Month is one of my favorite books in this space; this book introduced me to the concept of a "Toolsmith" – and surgical software teams with different skill sets.

On Identifying Opportunities

Richard Hamming's The Art of Doing Science & Engineering is an excellent book for building good habits: choosing to work on something important, keeping up to date, and working towards the future.

Tools are generally introduced into a specific context and might not interact with the environment in the way you expect them to. Thinking in Systems by Donella Meadows is an excellent way to start thinking about how different systems might interact; Systemantics is a somewhat cynical-yet-accurate description of why your tools will almost certainly never work out the way you expect.

Acknowledgments

Thanks to Kent Beck, Aditya Athalye, Joe Thomas, and Lindsey W for patiently discussing several aspects of this series and giving their valuable feedback.

Thanks also go out, of course, to everyone I've worked with and learned from while building – and using – developer tools over the years!

Comments

Add your comments to this Twitter thread, or feel free to drop me an email.

1/ https://t.co/uXslEdmKgd

— Kunal Bhalla (@kunalbhalla) June 24, 2021

Overview, and some background about why I wrote this in the first place. pic.twitter.com/YuG5SMAX9h

Updates

- 2021-08-07: Fixed some mistakes. Thinking about v2.

- 2021-08-01: One final round of edits before sharing v1.

- 2021-07-30: More edits, starting with Leverage.

- 2021-07-21: Index edited by Lindsey W.

- 2021-07-20: Exercising Grammarly, one post at a time.

- 2021-07-19: Fixed several typos.

- 2021-07-17: Added a new model & acknowledgments, more polish.

- 2021-06-24: Some polish.

- 2021-06-22: More drafts on inspiring tools and resources.

- 2021-06-20: Added a draft around model measurement.

- 2021-06-19: Played around with models for personal productivity.

- 2021-06-18: Iterated on models to estimate the added value of a toolsmith.

- 2021-06-17: Added a draft around execution, as well as leverage.

- 2021-06-16: Added a draft around identifying opportunities.

- 2021-06-12: Added a draft around traps for tool builders.

- 2021-05-29: Added a draft on characteristics of well-built tools.

- 2021-05-27: Started visibly publishing drafts online.

- 2021-05-22: Revamped expLog to have a nice place to publish.

- 2021-01-xx: I started outlining this note after spending a lot of time working on, talking about, and giving feedback on developer tools.